What type of processor is a GPU?

“GPU” is a common abbreviation in the computer industry, especially in discussions of graphics, artificial intelligence (AI), and video games. What is a graphics processing unit (GPU), and what type of processor is it? Let's explore this interesting part of the computer.

Introduction:

Understanding the purpose and role of a GPU is the first step in understanding what type of processor it is. GPU is short for graphics processing unit. For graphics and other highly parallel workloads, dedicated GPUs are a significant improvement over traditional central processing units (CPUs), which are responsible for general-purpose computing.

Understanding the Architecture:

There are several important differences between CPUs and GPUs in terms of architecture. A GPU contains thousands of smaller, simpler cores designed for parallel computing, whereas a CPU typically contains a handful of more powerful cores optimized for sequential processing.

Graphics processing units (GPUs) are perfect for tasks like 3D visual rendering, video playback, and accelerating machine learning algorithms due to their parallel architecture, which allows them to manage large amounts of data simultaneously.
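To make the contrast concrete, here is a minimal sketch in plain Python. It runs entirely on the CPU, so it shows only the shape of the work, not any speedup. The names `scale_kernel` and `launch` are hypothetical and chosen for illustration; they do not belong to any real GPU library.

```python
# Illustrative only: plain Python runs both versions on the CPU.

def scale_sequential(values, factor):
    """CPU-style: one worker walks the data element by element."""
    out = []
    for v in values:
        out.append(v * factor)
    return out


def scale_kernel(i, values, factor, out):
    """GPU-style: the work for ONE element, identified by its index i.

    No call depends on any other call, so a GPU could run one
    such call per core at the same time.
    """
    out[i] = values[i] * factor


def launch(kernel, n, *args):
    """Hypothetical stand-in for a GPU launch.

    A real GPU would execute these calls in parallel;
    here they simply run one after another.
    """
    for i in range(n):
        kernel(i, *args)


values = [1.0, 2.0, 3.0, 4.0]
out = [0.0] * len(values)
launch(scale_kernel, len(values), values, 2.0, out)
```

Both versions produce the same result. The difference is that the second one expresses the job as many independent pieces, which is the form a parallel processor needs.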

Understanding a GPU’s design is essential to making full use of its capabilities. Where CPUs are optimized for sequential processing, GPUs are built to handle large amounts of data simultaneously.

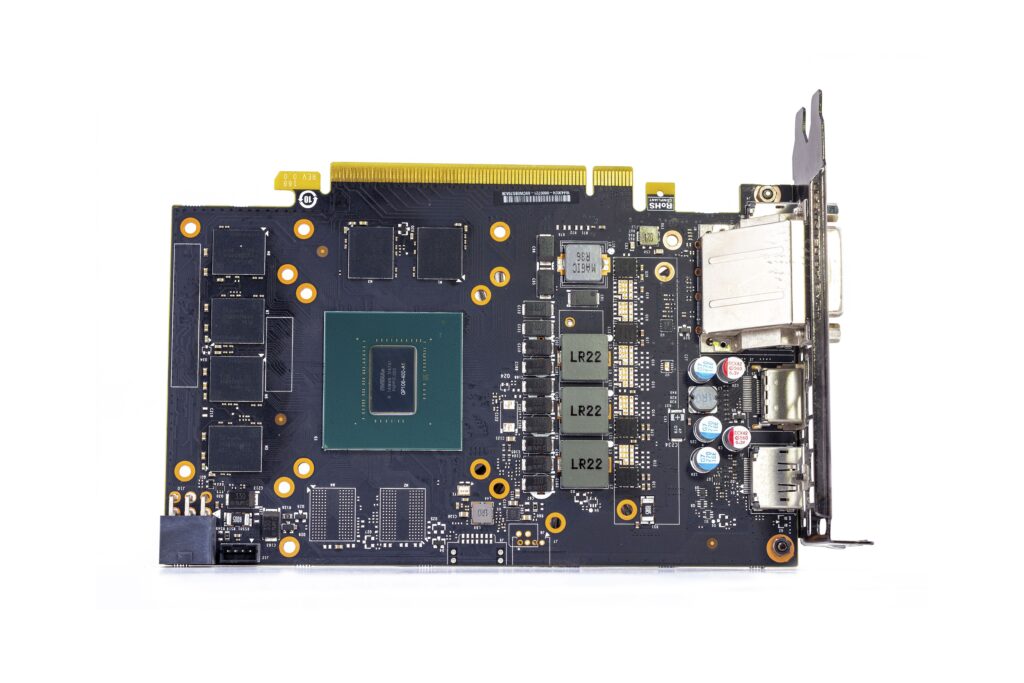

A graphics processing unit (GPU) organizes its thousands of small processing cores into clusters, which NVIDIA calls streaming multiprocessors (SMs). Because these cores are designed to execute instructions in parallel, the GPU can perform complex calculations at very high speed.
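One way to picture this grouping is the block-and-thread model used by NVIDIA's CUDA, where work is divided into blocks and each block is assigned to a streaming multiprocessor. The sketch below imitates that indexing scheme in plain Python. The function names are hypothetical, the loops run sequentially, and the block size of 4 is an arbitrary assumption.

```python
def global_index(block_id, block_size, thread_id):
    """Position of one thread in the whole job.

    Mirrors the CUDA idiom: blockIdx.x * blockDim.x + threadIdx.x
    """
    return block_id * block_size + thread_id


def blocks_needed(n, block_size):
    """Number of blocks required to cover n items (rounded up)."""
    return (n + block_size - 1) // block_size


def run_grid(n, block_size, work):
    """Simulate a grid of blocks; on real hardware blocks run concurrently."""
    results = [None] * n
    for block_id in range(blocks_needed(n, block_size)):
        # Each block could be scheduled on a different multiprocessor.
        for thread_id in range(block_size):
            i = global_index(block_id, block_size, thread_id)
            if i < n:  # threads past the end of the data stay idle
                results[i] = work(i)
    return results


squares = run_grid(10, 4, lambda i: i * i)
```

With 10 items and blocks of 4 threads, three blocks are needed, and the last two threads of the final block do nothing.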

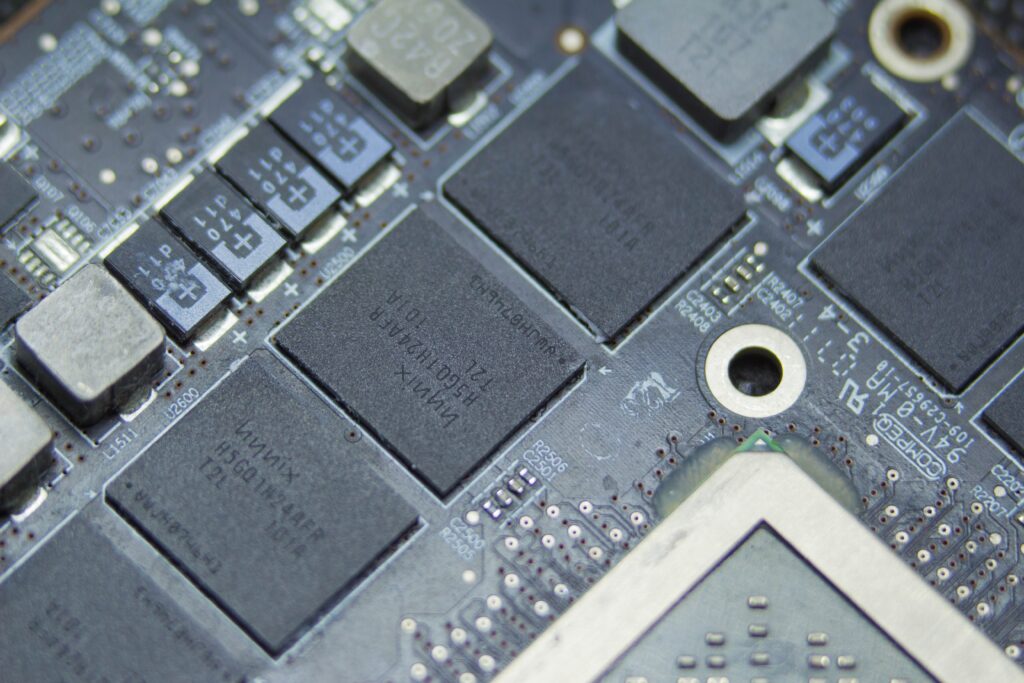

Video random access memory (VRAM) is dedicated memory built into the graphics hardware that gives the GPU fast access to its data. Thanks to this dedicated memory, the GPU can store and retrieve the large amounts of data required for computationally intensive applications such as scientific simulations and graphics rendering.
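A rough calculation gives a sense of scale. The sketch below estimates the memory needed for a single uncompressed frame, assuming 4 bytes per pixel (8-bit red, green, blue, and alpha channels). That pixel format is an assumption for illustration, and a real application also keeps textures, geometry, and additional buffers in VRAM.

```python
def framebuffer_bytes(width, height, bytes_per_pixel=4):
    """Memory for one uncompressed frame (assumes 4 bytes per pixel)."""
    return width * height * bytes_per_pixel


def to_mib(n_bytes):
    """Convert bytes to mebibytes (1 MiB = 1024 * 1024 bytes)."""
    return n_bytes / (1024 * 1024)


full_hd = framebuffer_bytes(1920, 1080)  # 8,294,400 bytes, about 7.9 MiB
uhd_4k = framebuffer_bytes(3840, 2160)   # 33,177,600 bytes, about 31.6 MiB
```

A 4K frame has four times the pixels of a Full HD frame, so it needs four times the memory.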

A graphics processing unit (GPU) is an essential piece of hardware for many different types of computer tasks, including AI, scientific research, digital content creation, and gaming. Its architecture has been fine-tuned to maximize parallelism, memory bandwidth and programmability.

Graphics Processing:

Processing and rendering visuals to the screen is one of the main functions of the GPU. Whether you’re immersed in a graphically intensive game, editing media, or simply navigating your computer’s GUI, the GPU is silently processing calculations and creating visuals in real time.

Parallel Computing Power:

The ability to perform exceptionally well on activities that demand massive parallelization is a hallmark of graphics processing units (GPUs), distinguishing them from traditional central processing units (CPUs). Graphics processing units (GPUs) consist of thousands of small cores that can execute instructions on different data sets simultaneously, unlike central processing units (CPUs), which typically have a few strong cores for sequential processing.

Due to their inherent parallelism, GPUs can run computationally complex operations at very high speed. Parallel processing lets a GPU work on large amounts of data and many calculations simultaneously, significantly improving speed, whether it is rendering complex 3D graphics, training deep learning models, or running scientific simulations.

Parallel computing on GPUs breaks large computational problems into smaller, more manageable tasks that can be handled simultaneously on multiple cores, which is a huge advantage. By exploiting this massive parallelism, GPUs can finish such calculations much faster than CPUs.
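The divide-and-combine pattern described here can be sketched with Python's standard `concurrent.futures` module. This is only an illustration of the decomposition: it uses CPU threads rather than GPU cores, and standard CPython threads do not speed up pure-Python arithmetic. The helper names and the choice of four workers are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut data into at most `parts` contiguous chunks."""
    size = max(1, (len(data) + parts - 1) // parts)
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """The small task: each worker handles one chunk on its own."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Split the problem, solve the pieces concurrently, combine the results."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


total = parallel_sum_of_squares(list(range(1000)))
```

The final `sum(partials)` is the combining step. On a GPU the same idea is applied with thousands of workers instead of four.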

Additionally, GPUs are incredibly scalable, so you can use multiple GPUs in a single system to increase parallel processing capability even more. Because of this, it is possible to split the workload across multiple GPUs, leading to even greater performance gains. This is especially helpful for demanding applications such as high-performance computing and machine learning.
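Splitting a workload across several GPUs starts with deciding which device gets which slice of the data. The sketch below shows one simple way to do that evenly. The function name is hypothetical, and real multi-GPU frameworks also handle data transfer and synchronization, which are not shown.

```python
def partition(n_items, n_devices):
    """Assign each device a contiguous (start, end) range of items.

    Sizes differ by at most one, so no device is left with
    noticeably more work than the others.
    """
    base, extra = divmod(n_items, n_devices)
    ranges = []
    start = 0
    for device in range(n_devices):
        # The first `extra` devices take one additional item each.
        count = base + (1 if device < extra else 0)
        ranges.append((start, start + count))
        start += count
    return ranges


plan = partition(10, 4)
```

For 10 items on 4 devices, the first two devices receive three items each and the remaining two receive two each.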

In short, GPUs process complex tasks quickly and efficiently largely because their architecture is built for parallel computing. With these parallel processing capabilities, GPUs keep pushing the boundaries of computing, whether that means improving artificial intelligence, powering immersive gaming experiences, or accelerating scientific research.

Specialized Processing Units:

A graphics processing unit (GPU) runs several kinds of specialized programs, called shaders, each designed to do a particular job. Examples include vertex shaders, which modify the geometry of 3D objects; pixel shaders, which render individual pixels; and compute shaders, which do general-purpose processing on GPU cores. All of these work together to make the GPU efficient and versatile, and they are vital to the rendering pipeline as a whole.
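The division of labor between shader types can be sketched in plain Python. Real shaders are written in dedicated shading languages and run on the GPU, so the functions below are simplified stand-ins: the 2D transform and the color gradient are assumptions made for the example.

```python
def vertex_shader(vertex, scale, offset):
    """Runs once per vertex: changes geometry (here, scale then move in 2D)."""
    x, y = vertex
    return (x * scale + offset[0], y * scale + offset[1])


def pixel_shader(x, y, width, height):
    """Runs once per pixel: returns an (R, G, B) color.

    Red increases left to right, green increases top to bottom.
    """
    r = int(255 * x / max(width - 1, 1))
    g = int(255 * y / max(height - 1, 1))
    return (r, g, 0)


# Vertex stage: every vertex is transformed independently.
triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
moved = [vertex_shader(v, 2.0, (1.0, 1.0)) for v in triangle]

# Pixel stage: every pixel is colored independently.
image = [[pixel_shader(x, y, 4, 2) for x in range(4)] for y in range(2)]
```

Neither function looks at neighboring vertices or pixels, which is what lets a GPU run many copies of each shader at the same time.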

Conclusion:

In conclusion, a graphics processing unit (GPU) is a specialized processor that excels at visual processing and parallel computing. Unlike a traditional central processing unit (CPU), its design uses thousands of small cores optimized for parallel computation. GPUs are an integral part of modern computing, fueling innovation and expanding the boundaries of what can be achieved, whether you’re training cutting-edge AI models, enjoying immersive gaming experiences, or advancing scientific research.